Forensic Audit: Synthetic Data Reliability

A professional MLOps investigation that uses neural networks and Bayesian optimization to uncover fundamental flaws in a widely used synthetic financial dataset.

About the Project

Engineering Challenges

01 Identifying the 'Synthetic Trap'

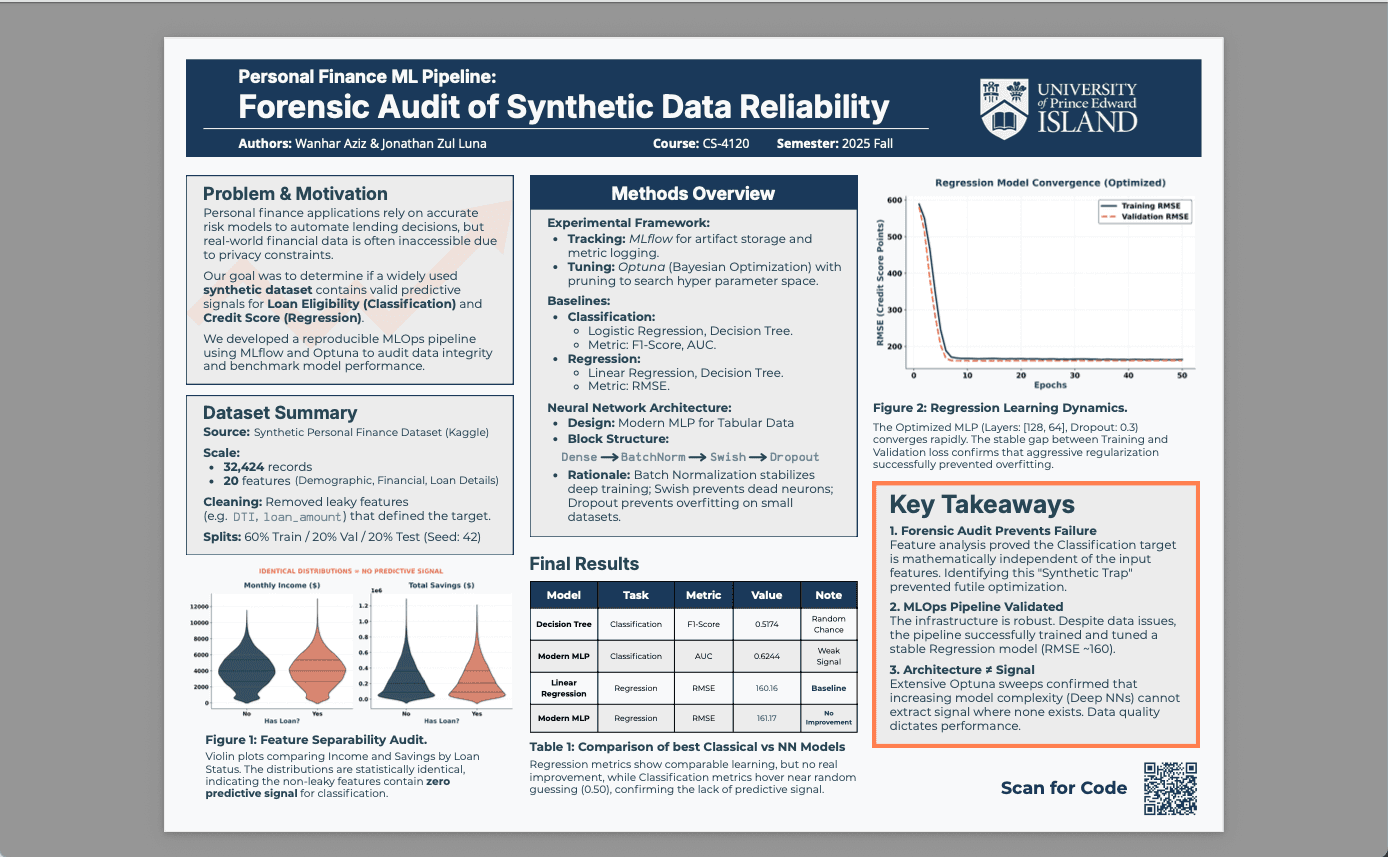

The dataset initially showed misleadingly high performance because of 'leaky' features, such as loan amount, that effectively encoded the target.
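One quick way to screen for this kind of leakage is to score each feature on its own. A feature whose single-variable ROC AUC is close to 1.0 (or 0.0) nearly determines the target by itself. The stdlib-only sketch below computes that AUC from the Mann-Whitney U statistic. The column names, toy rows, and the 0.95 threshold are hypothetical illustrations, not values from this project.

```python
from typing import Dict, List


def auc_single_feature(values: List[float], labels: List[int]) -> float:
    """ROC AUC of one raw feature used directly as a score (Mann-Whitney U).

    Ties count as half a win. O(n_pos * n_neg), fine for an audit sample.
    """
    pos = [v for v, y in zip(values, labels) if y == 1]
    neg = [v for v, y in zip(values, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def flag_leaky_features(
    columns: Dict[str, List[float]], labels: List[int], threshold: float = 0.95
) -> Dict[str, float]:
    """Return features whose AUC is >= threshold or <= 1 - threshold.

    Near-perfect single-feature separation is a red flag for target leakage.
    The default threshold is an assumption, not a standard value.
    """
    flagged = {}
    for name, values in columns.items():
        auc = auc_single_feature(values, labels)
        if auc >= threshold or auc <= 1.0 - threshold:
            flagged[name] = auc
    return flagged


# Hypothetical toy data: "loan_amount" separates the classes perfectly,
# while "age" carries only a weak signal.
labels = [0, 0, 0, 1, 1, 1]
columns = {
    "loan_amount": [1000, 1200, 1100, 5000, 5200, 4900],
    "age": [30, 45, 28, 41, 29, 44],
}
print(flag_leaky_features(columns, labels))  # → {'loan_amount': 1.0}
```

A univariate screen like this only catches features that leak on their own. Leakage spread across a combination of columns would need a model-based check.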

Solution: Conducted a feature separability audit with violin plots, which showed that the remaining (non-leaky) features had near-zero correlation with the target; this finding prevented futile over-optimization.
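Violin plots are a visual check. A numeric companion can make the "near-zero correlation" finding reproducible. The sketch below is a stdlib-only illustration, not the project's audit code. For each feature, it computes the point-biserial correlation (Pearson r between the feature and a 0/1 target) and the per-class quartiles, which is the shape summary a violin plot draws. The feature names and values are hypothetical.

```python
import math
import statistics
from typing import Dict, List


def point_biserial(values: List[float], labels: List[int]) -> float:
    """Pearson correlation between a continuous feature and a 0/1 target."""
    n = len(values)
    mx = sum(values) / n
    my = sum(labels) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(values, labels))
    sx = math.sqrt(sum((x - mx) ** 2 for x in values))
    sy = math.sqrt(sum((y - my) ** 2 for y in labels))
    if sx == 0 or sy == 0:
        return 0.0  # constant column or single-class target: no linear signal
    return cov / (sx * sy)


def separability_report(
    columns: Dict[str, List[float]], labels: List[int]
) -> Dict[str, dict]:
    """Per feature: correlation with the target plus per-class quartiles."""
    report = {}
    for name, values in columns.items():
        by_class = {c: [v for v, y in zip(values, labels) if y == c] for c in (0, 1)}
        report[name] = {
            "r": point_biserial(values, labels),
            # Overlapping quartiles mean overlapping violins: little separability.
            "q_class0": statistics.quantiles(by_class[0], n=4),
            "q_class1": statistics.quantiles(by_class[1], n=4),
        }
    return report


# Hypothetical data: "income" is distributed identically in both classes,
# "hypothetical_leaky" is strongly tied to the label.
labels = [0, 1, 0, 1, 0, 1, 0, 1]
columns = {
    "income": [50, 50, 60, 60, 70, 70, 80, 80],
    "hypothetical_leaky": [1, 9, 2, 8, 1, 9, 2, 8],
}
report = separability_report(columns, labels)
for name, stats in report.items():
    print(name, round(stats["r"], 3), stats["q_class0"], stats["q_class1"])
```

Pearson r only captures linear association. A feature could have r near zero and still separate the classes non-linearly, which is one reason to keep the plots alongside the numbers.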

02 Architecture vs. Signal

There was a risk that the poor performance stemmed from a weak model rather than from uninformative data.

Solution: Implemented a modern MLP with BatchNorm, Swish activation, and Dropout. We ran extensive Optuna sweeps to explore the architectural search space thoroughly; the results indicated that the model had hit an irreducible error floor dictated by the data rather than by model capacity.
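To make the building blocks concrete, here is a minimal NumPy sketch of one hidden block. It is an illustration, not the project's model. The Linear → BatchNorm → Swish → Dropout ordering is an assumption (a common convention), and all shapes and values are hypothetical. The block shows what each component does. BatchNorm standardizes each unit with batch statistics. Swish computes x · sigmoid(βx), and β = 1 is also known as SiLU. Inverted dropout zeroes units during training and rescales the survivors. Knobs such as hidden width and the dropout rate `p` are the kind of hyperparameters an Optuna sweep would search over.

```python
import numpy as np


def swish(x: np.ndarray, beta: float = 1.0) -> np.ndarray:
    """Swish: x * sigmoid(beta * x); beta=1 is also called SiLU."""
    return x / (1.0 + np.exp(-beta * x))


def batch_norm_train(x, gamma, shift, eps=1e-5):
    """Standardize each feature with the current batch's mean and variance.

    Training-mode only: a real layer also tracks running statistics for inference.
    """
    mean = x.mean(axis=0)
    var = x.var(axis=0)  # biased variance, as used for normalization
    return gamma * (x - mean) / np.sqrt(var + eps) + shift


def dropout(x, p, rng, training=True):
    """Inverted dropout: zero each unit with prob p, rescale survivors by 1/(1-p)."""
    if not training or p == 0.0:
        return x
    mask = rng.random(x.shape) >= p
    return x * mask / (1.0 - p)


def mlp_block(x, w, b, gamma, shift, p, rng, training=True):
    """One hidden block: Linear -> BatchNorm -> Swish -> Dropout (assumed order)."""
    h = x @ w + b
    h = batch_norm_train(h, gamma, shift)
    h = swish(h)
    return dropout(h, p, rng, training)


# Hypothetical batch: 32 rows, 8 input features, 16 hidden units.
rng = np.random.default_rng(0)
x = rng.normal(size=(32, 8))
w = rng.normal(scale=0.1, size=(8, 16))
b = np.zeros(16)
gamma, shift = np.ones(16), np.zeros(16)
out = mlp_block(x, w, b, gamma, shift, p=0.2, rng=rng)
print(out.shape)  # (32, 16)
```

In PyTorch the same block would typically be assembled from `nn.Linear`, `nn.BatchNorm1d`, `nn.SiLU`, and `nn.Dropout`. The NumPy version simply exposes the arithmetic.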